Written by María Navarro


Google provides a set of guidelines on what your website content should look like in order to appear in search results.

Google’s guidelines are divided into several categories:

- Webmaster Guidelines.

- General guidelines.

- Specific content guidelines.

- Quality guidelines.

In this article we will focus on the last category, the quality guidelines, which describe manipulation techniques that Google prohibits. Implementing any of these techniques can lead to our website being penalized.

Quality guidelines

- Automatically generated content.

- Misleading redirects.

- Link schemes.

- Cloaking.

- Hidden text and links.

- Doorway pages.

- Copied content.

- Excessive use of keywords.

- Creation of pages with malicious behavior.

- User-generated spam.

Everything in this article is published in the Quality Guidelines on Google’s support site, but we wanted to summarize these specifications to help you quickly identify which techniques can harm your website’s ranking.

Automatically generated content

Google aims to offer users unique, high-quality content. Creating original content is costly in time and resources, so one of the easiest and most common shortcuts is to plagiarize it or generate it automatically.

If Google detects automatically generated content, it may conclude that you are trying to manipulate search rankings and apply a penalty.

Google considers the following to be automatically generated content:

- Text that makes no sense to the reader but is packed with keywords.

- Text translated by automated tools without human review and editing.

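- Text generated by automated processes, such as Markov chains. A Markov chain is a sequence of random variables in which each value depends only on the current state. Applied to text, it strings words together according to which words tend to follow each other in a source text, producing sentences that look plausible but make no sense.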

- Text produced with obfuscation techniques or by automatic synonym substitution.

- Text generated by merging content from several web pages without any added value.

- Text generated by scraping Atom/RSS feeds or search results.

Two common techniques for generating content automatically are translation and “scrape and spin” (copying text strings, fragmenting them and recombining the pieces in a different order).

- Translation consists of scraping content written in another language, translating it and publishing it on your own website.

- Text spinning takes existing texts from other websites and introduces syntactic variations so that they look like “new and original text”. The variations can be written by hand, but there are also tools that automate the process.

Our recommendation is not to use automatic content generation on any serious website that your brand or business depends on. These tactics may work for a while, but they carry the risk of a penalty. The quality guidelines set the rules of the game, and Google makes it clear that it does not accept automatically generated content of this kind.

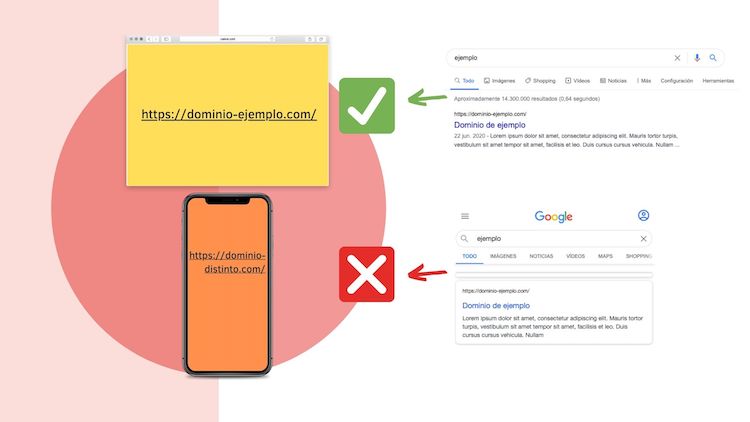

Misleading redirects

A redirect is the server automatically forwarding a request for one URL to another. In many situations a redirect is the best way to tell Google that a URL has changed, and in those cases it is perfectly legitimate. For example, when the same content is duplicated across several URLs and we want to consolidate it into one, or when a URL has changed and the redirect points to the current one.
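As an illustration of a legitimate redirect, here is a minimal Python WSGI sketch. It assumes a hypothetical `REDIRECTS` table of retired paths and their replacements; in practice the same rule is usually configured in the web server or CMS rather than written by hand. What matters is that every client, browser or crawler, receives the same permanent (301) redirect.

```python
# Hypothetical table of retired paths and the URLs that replace them.
REDIRECTS = {
    "/old-page": "/new-page",
    "/tents-old": "/shop/tents",  # e.g. a duplicate URL consolidated into one
}


def app(environ, start_response):
    """Minimal WSGI app: permanent redirect for moved paths, plain page otherwise."""
    path = environ.get("PATH_INFO", "/")
    target = REDIRECTS.get(path)
    if target is not None:
        # 301 = moved permanently. The answer does not depend on the
        # User-Agent, so users and search engine robots end up in the same place.
        start_response("301 Moved Permanently", [("Location", target)])
        return [b""]
    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8")])
    return [f"Content for {path}".encode("utf-8")]


# To try it locally with the standard library:
#   from wsgiref.simple_server import make_server
#   make_server("", 8000, app).serve_forever()
```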

But in some cases redirects are used to deceive search engines, showing users different content from what search engine robots see. These misleading redirects violate Google’s quality guidelines, and the site may be penalized if Google detects that they harm the user experience.

Some developers set up these redirects deliberately, but deceptive mobile redirects can also happen without the site owner knowing, for example after the website has been hacked.

A common example of a misleading redirect: imagine we run a search and the same URL appears in the results on both mobile and desktop devices. On desktop, the user clicks the result and the page opens normally; so far, so good. The problem arises when the user clicks the same result on a mobile device and, instead of landing on the expected URL, is redirected to an unrelated one. The following infographic illustrates the case.
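To check your own site for this problem, you can request the same URL once as a desktop browser and once as a mobile browser, then compare where each request ends up. The following is a minimal sketch using only the Python standard library. The User-Agent strings are abbreviated examples, and the `fetch` parameter is a hypothetical hook that lets you swap in another fetcher.

Keep in mind that `urllib` follows HTTP redirects but does not run JavaScript, so script-based redirects, which are common after a hack, will not show up here. A difference is also only a signal to investigate, because sites with a separate mobile version (for example an `m.` subdomain) legitimately send mobile users elsewhere.

```python
import urllib.request
from typing import Callable

# Abbreviated example User-Agent strings; any realistic desktop/mobile pair works.
DESKTOP_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"
MOBILE_UA = "Mozilla/5.0 (iPhone; CPU iPhone OS 17_0 like Mac OS X) Mobile"


def final_url(url: str, user_agent: str) -> str:
    """Request url with the given User-Agent and return the URL reached after redirects."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    # urlopen follows HTTP redirects automatically; geturl() reports where it ended up.
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.geturl()


def mobile_redirect_differs(url: str, fetch: Callable[[str, str], str] = final_url) -> bool:
    """True if desktop and mobile visitors end up on different URLs."""
    return fetch(url, DESKTOP_UA) != fetch(url, MOBILE_UA)
```

For example, `mobile_redirect_differs("https://www.example.com/")` performs two real requests against a placeholder URL that you would replace with a page from your own site.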

Link schemes

Another of the most common violations involves links intended to manipulate PageRank. Links of this kind can hurt the website’s ranking.

Some common examples of this attempted manipulation are as follows:

- Buying and selling links in order to manipulate PageRank.

- Sending people free products in exchange for writing about us and including a link, or exchanging services for links.

- Excessive link exchanges between sites.

- Using automated programs or services to create links.

- Large-scale article marketing or guest-posting campaigns with keyword-rich anchor text.

- Requiring a client to include a followed link as a condition of providing a service. The most common case is a developer adding “Developed by [company name]” to the footer or another element of the client’s website.

The best way to earn external links without being penalized by Google is to make other websites want to link to ours simply because it contains unique, relevant, useful, high-quality information. Content like that quickly gains popularity among users on its own.

Cloaking

The content shown to users and the content shown to search engines must always be the same. Otherwise it is considered cloaking, which violates Google’s quality guidelines and can be penalized.

Examples of cloaking:

- Configuring the server to serve different content depending on who requests the page, for example inserting additional text or keywords only when it detects a request from a search engine.

- Showing human visitors a page built with JavaScript, images or Flash while showing search engines a plain HTML page.

In general, these cloaking techniques are becoming less and less common, and search engines have evolved to the point where they tend to detect and penalize them quickly.
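A rough self-check for cloaking is to fetch a page once with Googlebot’s User-Agent string (`Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)`) and once with a normal browser’s, then compare the visible text of the two responses. The minimal Python sketch below does only the comparison step: it takes the two HTML documents as input. The 0.9 similarity threshold is an arbitrary assumption. The check cannot catch cloaking that depends on the visitor’s IP address or on JavaScript that runs after the page loads.

```python
from difflib import SequenceMatcher
from html.parser import HTMLParser


class VisibleTextExtractor(HTMLParser):
    """Collect the words of a page's text, skipping <script> and <style> contents."""

    def __init__(self):
        super().__init__()
        self.words = []
        self.skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        if not self.skip_depth:
            self.words.extend(data.split())


def visible_words(html: str) -> list:
    extractor = VisibleTextExtractor()
    extractor.feed(html)
    extractor.close()
    return extractor.words


def looks_cloaked(html_for_crawler: str, html_for_browser: str, threshold: float = 0.9) -> bool:
    """True if the two versions of the page share less text than the threshold allows."""
    similarity = SequenceMatcher(
        None, visible_words(html_for_crawler), visible_words(html_for_browser)
    ).ratio()
    return similarity < threshold
```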

Hidden text and links

Here we face another fairly common case: content and links hidden in a site’s code. Often this is not done deliberately, and those responsible may not even know it counts as manipulation. It does, however, and it can have negative consequences, so we strongly recommend checking your site for this kind of hidden content and fixing it.

Here are some common content hiding techniques:

- Use CSS to hide text, for example with display:none, or to position it off-screen where users cannot see it.

- Use white text on a white background.

- Place text behind an image.

- Set the font size to 0 so that the text is not displayed.

- Hide a link in a single small character (for example, a hyphen in the middle of a paragraph) or hide it with other CSS methods.
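Several of these techniques leave traces in inline `style` attributes, so a first pass can be automated. This minimal Python sketch flags elements whose inline style hides text or sets white text on a white background. It is a heuristic under clear limits. Styles applied through classes or external stylesheets are not evaluated, which would require a real browser. Anything flagged still needs a manual review, because hidden content can be legitimate (menus, tabs, accessibility helpers).

```python
import re
from html.parser import HTMLParser

# Inline-style patterns that often hide text (heuristics, not an exhaustive list).
HIDING_PATTERNS = [
    re.compile(r"display\s*:\s*none"),
    re.compile(r"visibility\s*:\s*hidden"),
    re.compile(r"font-size\s*:\s*0(\.0+)?(px|pt|em|rem|%)?\s*(;|!|$)"),
    re.compile(r"text-indent\s*:\s*-\d{3,}"),  # e.g. text-indent:-9999px (off-screen)
]
WHITE = r"(#fff\b|#ffffff\b|white\b|rgb\(\s*255\s*,\s*255\s*,\s*255\s*\))"
WHITE_TEXT = re.compile(r"(^|;)\s*color\s*:\s*" + WHITE)
WHITE_BACKGROUND = re.compile(r"background(-color)?\s*:\s*" + WHITE)


class HiddenTextFinder(HTMLParser):
    """Record (tag, style) for elements whose inline style matches a hiding pattern."""

    def __init__(self):
        super().__init__()
        self.flagged = []

    def handle_starttag(self, tag, attrs):
        style = (dict(attrs).get("style") or "").lower()
        white_on_white = WHITE_TEXT.search(style) and WHITE_BACKGROUND.search(style)
        if white_on_white or any(p.search(style) for p in HIDING_PATTERNS):
            self.flagged.append((tag, style))


def find_hidden_text(html: str) -> list:
    finder = HiddenTextFinder()
    finder.feed(html)
    finder.close()
    return finder.flagged
```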

Keep in mind that hidden content is not always penalized. There are exceptions, usually related to accessibility.

If your site uses technologies that search engines find difficult to crawl, such as images, JavaScript or Flash, it is advisable to add descriptive text to help them.

Users may also benefit from these descriptions if for any reason they are unable to view this type of content.

Examples for improving accessibility:

- Images: add an “alt” attribute with descriptive text.

- JavaScript: include the same content in a <noscript> tag.

- Videos: include descriptive text about the video in the HTML.
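The image recommendation is easy to audit automatically. Here is a minimal Python sketch using the standard `html.parser` module that lists every `<img>` without an `alt` attribute. It deliberately does not flag `alt=""`, since an empty alt is the accepted way to mark purely decorative images.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Collect the src of every <img> tag that has no alt attribute at all."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attributes = dict(attrs)
            # alt="" is not flagged: an empty alt marks a decorative image.
            if "alt" not in attributes:
                self.missing.append(attributes.get("src") or "")


def images_without_alt(html: str) -> list:
    finder = MissingAltFinder()
    finder.feed(html)
    finder.close()
    return finder.missing
```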

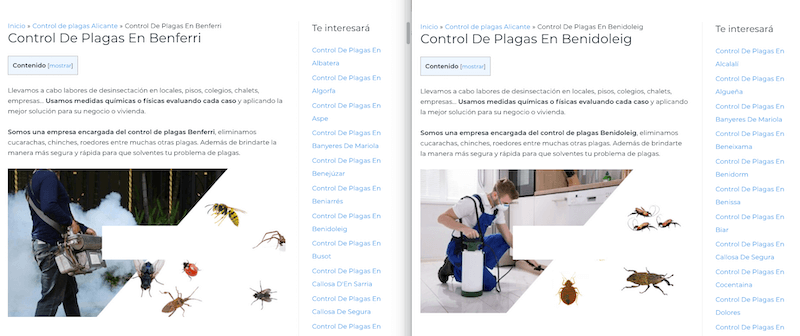

Doorway pages

Doorway pages are sites or pages created solely to rank for very specific searches targeting one or more keywords. Landing pages of this type generally risk a Google penalty, because Google does not consider them good for users: they produce several very similar results.

These pages are usually created to funnel traffic to the website or its home page. They are designed for search engine rankings rather than to give users a quality result.

They tend to be low-quality pages that offer users no added value, often built from automated content with slight variations.

The most common case is creating doorway pages to rank a service for each city name, for example: “Pest control Murcia”, “Pest control Alicante”…
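City pages like these are usually near-copies of each other, which you can measure on your own site. Google does not publish how it detects doorway pages, so the following Python sketch is only a self-audit heuristic. It compares pages by the Jaccard similarity of their three-word sequences ("shingles"), and its 0.6 threshold is an arbitrary assumption. The same approach can also flag slightly reworded copied content.

```python
import re
from itertools import combinations


def shingles(text: str, k: int = 3) -> set:
    """Set of k-word sequences in the text, ignoring case and punctuation."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Shared shingles divided by all distinct shingles (1.0 = identical)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def near_duplicate_pages(pages: dict, threshold: float = 0.6) -> list:
    """Return (url_a, url_b, score) for every pair of pages at or above threshold."""
    sets = {url: shingles(text) for url, text in pages.items()}
    pairs = []
    for a, b in combinations(sorted(sets), 2):
        score = jaccard(sets[a], sets[b])
        if score >= threshold:
            pairs.append((a, b, round(score, 2)))
    return pairs
```

Two city pages that differ only in the city name score well above unrelated pages, even though every shingle containing the city name differs.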

Some examples of doorway pages:

- Pages that funnel visitors to the actually useful or relevant part of the website.

- Substantially similar pages that are closer to search results than to a clearly defined, browsable hierarchy.

- Multiple pages or domain names targeted at specific regions or cities that funnel users to a single page.

Each case has to be evaluated individually before giving a recommendation. However, if a site is penalized for this reason, the problem must be fixed as soon as possible.

Copied content

As we mentioned in the section on automatically generated content, producing high-quality content is hard work that requires a lot of resources. Just as automated content can be penalized, so can content copied from other websites.

Moreover, copying content infringes copyright, and we can be reported for it.

Examples of copied content practices:

- Sites that copy and paste content from other websites without providing any added value.

- Sites that copy content from other websites and modify it slightly, for example by substituting synonyms or using automated techniques.

- Sites that reproduce content feeds without providing benefit to the user.

Excessive use of keywords

Keyword stuffing is one of the oldest practices in SEO. It may have worked years ago, but it no longer does: loading content, links, metadata and so on with excessive keywords violates the quality guidelines and can be penalized. We recommend avoiding it and instead creating content that uses keywords and synonyms in moderation, naturally and in the right context.
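One way to spot stuffed pages on your own site is to measure keyword density, the share of a text’s words taken up by a keyword phrase. Google publishes no "safe" density value, so treat the number only as a way to find outliers worth rewriting. The following is a minimal Python sketch.

```python
import re


def keyword_density(text: str, keyword: str) -> float:
    """Fraction of the words in text covered by (non-overlapping) occurrences of keyword."""
    words = re.findall(r"\w+", text.lower())
    phrase = re.findall(r"\w+", keyword.lower())
    if not words or not phrase:
        return 0.0
    n, hits, i = len(phrase), 0, 0
    while i <= len(words) - n:
        if words[i:i + n] == phrase:
            hits += 1
            i += n  # skip past the match so occurrences don't overlap
        else:
            i += 1
    return hits * n / len(words)
```

For instance, in "Cheap shoes! Cheap shoes, buy cheap shoes online." the phrase "cheap shoes" covers 6 of the 8 words, a density of 0.75, which no natural text would reach.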

Creation of pages with malicious behavior

Creating pages that behave differently from what users expect, with malicious intent and to the detriment of their browsing experience, is clearly another violation of Google’s quality guidelines.

This point is much easier to understand with some examples of malicious behavior, many of which you have probably experienced yourself:

- Installing malicious software such as Trojans, viruses or spyware on the user’s computer. I dare say practically all of us have run into this at one time or another.

- Including unwanted files in a download the user requested.

- Tricking the user into clicking a button or link that does not actually do what the user thinks it does.

- Changing the user’s search preferences or browser home page without informing them or obtaining their consent.

Guidelines for user-generated spam

All the points above concern manipulation techniques applied intentionally by the website owner. Sometimes, however, it is users with bad intentions who generate spam on an otherwise high-quality site.

This problem usually arises on sites that let users add content in some way or create their own pages.

The main forms of user-generated spam are:

- Spam in blog comments.

- Spam posts in forum threads.

- Fraudulent accounts on free hosting services.

Pages full of spam give users a bad impression. If this feature is not useful to your users, or you do not have time to monitor posted comments regularly, it is advisable to disable it.

To avoid this type of spam we recommend:

- Enable moderation for comments and for profile creation.

- Use anti-spam tools (honeypots, reCAPTCHA).

- Add rel="nofollow" or rel="sponsored" to links (Google also supports rel="ugc", intended specifically for links in user-generated content).

- If your site lets users create pages, such as profile pages, forum threads or websites, you can use the noindex meta tag to keep pages from new or untrusted users out of search results. You can also use the robots.txt standard to block a page temporarily, for example: Disallow: /guestbook/newpost.php
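The last two recommendations can be sketched in Python. The first function rewrites the links in a user comment so they always carry rel="ugc nofollow", replacing any rel value the user supplied. The second checks URLs against the robots.txt rule quoted above, using the standard library’s `urllib.robotparser`. Both are minimal sketches: the regular expressions assume simple, well-formed markup, and a real site should run comments through a proper HTML sanitizer. Note also that Google has to crawl a page to see its noindex tag, so a URL should not be blocked in robots.txt while relying on noindex at the same time.

```python
import re
from urllib.robotparser import RobotFileParser

# --- 1. Qualify links posted by users ---------------------------------------
# Simplified patterns; assumes well-formed <a ...> tags.
ANCHOR_TAG = re.compile(r"<a\b([^>]*)>", re.IGNORECASE)
REL_ATTR = re.compile(r"""\s+rel\s*=\s*("[^"]*"|'[^']*'|[^\s>]+)""", re.IGNORECASE)


def mark_ugc_links(comment_html: str) -> str:
    """Force rel="ugc nofollow" on every <a> tag, dropping any rel the user supplied."""
    def rewrite(match):
        attrs = REL_ATTR.sub("", match.group(1))
        return f'<a{attrs} rel="ugc nofollow">'
    return ANCHOR_TAG.sub(rewrite, comment_html)


# --- 2. Keep unmoderated pages out of search results -------------------------
# Meta tag for pages created by new or untrusted users (goes in the page's <head>).
NOINDEX_META = '<meta name="robots" content="noindex">'

# The robots.txt rule from the article, as a string for testing.
ROBOTS_TXT = """\
User-agent: *
Disallow: /guestbook/newpost.php
"""


def is_crawlable(url: str, user_agent: str = "Googlebot", robots_txt: str = ROBOTS_TXT) -> bool:
    """Check a URL against robots.txt rules with Python's standard parser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)
```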

Our recommendation

Ranking a website may seem an arduous, costly and long-term job, but giving in to the temptation of black-hat techniques, especially if you are inexperienced, can lead to penalties from the search engine. We advise rereading Google’s quality guidelines regularly to make sure your website complies with them and, above all, keeping an eye out for new recommendations from Google.