Written by Ramón Saquete


The technique simply consists of compressing the information on the server before sending it to the client browser that requested it, saving time in the transmission of the data over the network. To compress the information you can use the gzip format or, alternatively, deflate.

Gzip is an open compression format developed by the GNU project. Like most lossless compression algorithms, it reduces file size by replacing frequently occurring strings with shorter ones. Specifically, the gzip and deflate formats are both based on the DEFLATE algorithm, which combines two techniques. The first is LZ77, created in 1977 by Abraham Lempel and Jacob Ziv, which replaces strings that have already appeared with a reference (distance and length) to their previous occurrence. The second is Huffman coding, which replaces the resulting symbols with Huffman codes. These codes are uniquely decodable binary sequences (they can be read without separators) and are shorter for the most frequent symbols.
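To illustrate the Huffman half of the process, here is a minimal Python sketch that builds a Huffman code from character frequencies. It is a didactic simplification, not the deflate implementation: it works on single characters and ignores both the LZ77 stage and the canonical-code details that deflate actually uses. `huffman_codes`, `encode` and `decode` are hypothetical helper names. It does show the two properties mentioned above: no code is a prefix of another, so a bit stream can be read without separators, and frequent symbols receive shorter codes.

```python
import heapq
from collections import Counter


def huffman_codes(text: str) -> dict[str, str]:
    """Build a Huffman code (symbol -> bit string) from character frequencies."""
    freq = Counter(text)
    if len(freq) == 1:
        # Degenerate case: a single distinct symbol still needs one bit
        return {next(iter(freq)): "0"}
    # Heap entries: (weight, unique tie-breaker, {symbol: code built so far})
    heap = [(w, i, {sym: ""}) for i, (sym, w) in enumerate(freq.items())]
    heapq.heapify(heap)
    next_id = len(heap)
    while len(heap) > 1:
        # Merge the two least frequent subtrees, prefixing 0 and 1 to their codes
        w1, _, left = heapq.heappop(heap)
        w2, _, right = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in left.items()}
        merged.update({s: "1" + c for s, c in right.items()})
        heapq.heappush(heap, (w1 + w2, next_id, merged))
        next_id += 1
    return heap[0][2]


def encode(text: str, codes: dict[str, str]) -> str:
    return "".join(codes[ch] for ch in text)


def decode(bits: str, codes: dict[str, str]) -> str:
    # Prefix property: accumulate bits until they match a code, no separators needed
    reverse = {c: s for s, c in codes.items()}
    out, current = [], ""
    for b in bits:
        current += b
        if current in reverse:
            out.append(reverse[current])
            current = ""
    return "".join(out)


text = "aaaaaaaabbbbccd"
codes = huffman_codes(text)
bits = encode(text, codes)
```

For `"aaaaaaaabbbbccd"` this assigns a 1-bit code to `a` and 3-bit codes to `c` and `d`, so the 15 characters are encoded in 25 bits instead of the 120 bits needed with 8-bit characters.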

Why is it so important?

This WPO technique is one of the easiest to apply, since it does not require any code modification, and at the same time it achieves a remarkable performance improvement, because it considerably reduces the amount of information that each client must download to view the page.

Its use is especially important if we embed images in style sheets or in the HTML code through Data URIs, since converting images to text (Base64) increases their size by about a third, and gzip compression recovers most of that increase, leaving them close to their original size.
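A quick way to check this effect is the following Python sketch. It assumes that random bytes are a fair stand-in for an already-compressed image (PNG and JPEG data are close to random); no real image is used. Base64 turns every 3 bytes into 4 characters, and gzip then removes most of that overhead because each Base64 character only carries 6 bits of information.

```python
import base64
import gzip
import os

# Stand-in for an already-compressed image: high-entropy bytes (assumption)
image_bytes = os.urandom(30_000)

# What ends up inside the CSS/HTML as a Data URI payload: 4 chars per 3 bytes
data_uri_payload = base64.b64encode(image_bytes)

# What actually travels over the network when the page is gzipped
gzipped_payload = gzip.compress(data_uri_payload)

print(len(image_bytes), len(data_uri_payload), len(gzipped_payload))
```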

How does it work?

To be usable, both the client and the server must support gzip compression. Browsers that support it (practically all those less than 10 years old) send the following parameter in the header of their HTTP/1.1 requests:

GET / HTTP/1.1
Host: www.humanlevel.com
Accept-Encoding: gzip, deflate

With this header, the client is telling the server: give me the home page of www.humanlevel.com, you can send it to me compressed using the gzip or deflate algorithm.

On receiving this request, the server may or may not respond with compressed content. If it does, it includes the following parameter in the response header:

Content-Encoding: gzip

With this parameter, the server is telling the client: I am sending you the page compressed with gzip. In this way, the client knows that it has to apply this algorithm to decompress it. When the header does not appear, it means that the content is uncompressed.
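The exchange described above can be sketched in Python with two hypothetical functions, `server_respond` and `client_read` (names invented for this illustration, not part of any server API): the server compresses only when the request's Accept-Encoding lists gzip, and the client decompresses only when the response carries Content-Encoding: gzip. It is a simplification under stated assumptions: headers are plain dictionaries with canonical capitalisation, and the parser only looks at the gzip token and an optional q value (q=0 means "not acceptable").

```python
import gzip


def accepts_gzip(accept_encoding: str) -> bool:
    """True if an Accept-Encoding value lists gzip with a non-zero q value."""
    for item in accept_encoding.split(","):
        parts = [p.strip() for p in item.split(";")]
        if parts[0].lower() != "gzip":
            continue
        q = 1.0  # default quality when no q parameter is given
        for param in parts[1:]:
            name, _, value = param.partition("=")
            if name.strip().lower() == "q":
                try:
                    q = float(value)
                except ValueError:
                    q = 0.0
        return q > 0
    return False


def server_respond(body: bytes, request_headers: dict[str, str]) -> tuple[dict[str, str], bytes]:
    """Hypothetical server: compress only if the client advertised gzip."""
    headers = {"Content-Type": "text/html; charset=utf-8"}
    if accepts_gzip(request_headers.get("Accept-Encoding", "")):
        headers["Content-Encoding"] = "gzip"
        body = gzip.compress(body)
    headers["Content-Length"] = str(len(body))
    return headers, body


def client_read(headers: dict[str, str], body: bytes) -> bytes:
    """Client side: undo the encoding announced in Content-Encoding, if any."""
    if headers.get("Content-Encoding") == "gzip":
        return gzip.decompress(body)
    return body  # no Content-Encoding header: the content is uncompressed
```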

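In practice, compressed content almost always comes in gzip. The alternative, deflate, is rarely used. Both formats use the same DEFLATE compression algorithm and differ only in the few bytes of header and checksum that wrap the data, so deflate brings no real saving; moreover, browsers and servers historically disagreed on whether "deflate" meant zlib-wrapped or raw data, which caused decoding errors, whereas gzip is interpreted the same way everywhere.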
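The following Python sketch, using the standard `zlib` module, shows that the difference between the formats is only framing. In HTTP, the "deflate" coding is defined as the zlib format (RFC 1950) wrapping a DEFLATE stream (RFC 1951), while gzip (RFC 1952) wraps the same stream with a 10-byte header and an 8-byte trailer. The sample text is an arbitrary assumption.

```python
import zlib

text = ("<p>gzip and deflate share the same compressed core.</p>\n" * 300).encode()


def deflate_with(wbits: int) -> bytes:
    # wbits selects the container: 31 = gzip, 15 = zlib ("deflate" in HTTP), -15 = raw DEFLATE
    comp = zlib.compressobj(6, zlib.DEFLATED, wbits)
    return comp.compress(text) + comp.flush()


gzip_body = deflate_with(31)
zlib_body = deflate_with(15)
raw_body = deflate_with(-15)

# Same compressed stream in all three; only the surrounding bytes differ
print(len(raw_body), len(zlib_body) - len(raw_body), len(gzip_body) - len(raw_body))
```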

Google Chrome also advertises in Accept-Encoding a compression format created by Google, SDCH (Shared Dictionary Compression over HTTP), but hardly any Web servers compress in this format, and there does not seem to be much interest in supporting it, even though it was proposed several years ago.

The server should also include the following parameter alongside the previous one:

Vary: Accept-Encoding

This parameter is aimed at intermediate proxy servers and solves the following situation. Suppose a browser that cannot use gzip makes the first request and the page is stored uncompressed in the proxy cache: all subsequent responses will be uncompressed, even for browsers that request gzip. Conversely, if the first request comes from a browser that supports gzip, all subsequent responses will be compressed, even for browsers that cannot decompress and display them to the user. With this parameter we tell the proxy to cache a different version for each value of the Accept-Encoding parameter in the browser request, which solves the problem.
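The proxy problem can be reproduced with a toy cache in Python. `ProxyCache` and `origin` are hypothetical names for this sketch, and a real proxy also deals with expiry, header normalisation and much more. The cache key is the URL plus the values of whichever request headers the origin listed in Vary, so without Vary every browser receives whatever version happened to be stored first.

```python
import gzip


def origin(url, request_headers, send_vary=True):
    """Hypothetical origin server; gzip detection is a deliberately naive substring check."""
    body = b"<html>" + b"<p>cached page</p>" * 100 + b"</html>"
    headers = {}
    if send_vary:
        headers["Vary"] = "Accept-Encoding"
    if "gzip" in request_headers.get("Accept-Encoding", ""):
        headers["Content-Encoding"] = "gzip"
        body = gzip.compress(body)
    return headers, body


class ProxyCache:
    def __init__(self, fetch):
        self.fetch = fetch      # callable(url, request_headers) -> (headers, body)
        self.vary = {}          # url -> names of request headers listed in Vary
        self.entries = {}       # (url, values of those headers...) -> (headers, body)

    def _key(self, url, request_headers):
        names = self.vary.get(url, ())
        return (url,) + tuple(request_headers.get(n, "") for n in names)

    def get(self, url, request_headers):
        key = self._key(url, request_headers)
        if key in self.entries:
            return self.entries[key]  # cache hit: served without asking the origin
        headers, body = self.fetch(url, request_headers)
        # Remember which request headers select a variant of this URL
        self.vary[url] = tuple(
            n.strip() for n in headers.get("Vary", "").split(",") if n.strip()
        )
        self.entries[self._key(url, request_headers)] = (headers, body)
        return headers, body
```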

Although using gzip involves an additional CPU cost, on the server to compress and on the client to decompress, the performance we gain by transmitting the information faster over the network makes it much more efficient overall. This is not only because the information takes up less space, but also because it is split into fewer data packets when it is sent and reassembled at the destination.
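The packet argument is simple arithmetic. This Python sketch assumes a maximum TCP payload (MSS) of 1460 bytes per segment, typical of Ethernet paths, a hypothetical 60 KiB HTML page and a 65% size reduction; real values depend on the network and the content.

```python
import math

MSS = 1460  # assumed TCP payload per segment on a typical Ethernet path


def segments(size_bytes: int, mss: int = MSS) -> int:
    """Number of TCP segments needed to carry size_bytes of payload."""
    return max(1, math.ceil(size_bytes / mss))


original = 60 * 1024                # hypothetical 60 KiB HTML page
compressed = original * 35 // 100   # assuming a 65% reduction

print(segments(original), segments(compressed))
```

Under these assumptions the page drops from 43 segments to 15, each of which no longer has to be sent, acknowledged and reassembled.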

What should we take into account in the configuration?

First of all, we must be clear about what should be compressed and what should not be compressed, since compressing certain files will result in a loss of performance.

We must apply compression to those files that are not already compressed. I am mainly referring to HTML, JavaScript, CSS, XML and JSON text files, whether they are generated statically or dynamically. By doing this, on average, we will be able to save between 60% and 70% of the size of these files.

If we try to compress files that are already compressed, we will slightly increase the size of these files. In this case, I am mainly referring to JPG, GIF or PNG images and PDF files. This, together with the CPU overhead for the server to compress them and the client to decompress them, will increase the loading time instead of decreasing it. This is due to the fact that at a certain point, the information cannot be compressed any more, so that when we try to compress it again, we make the file larger by including a new header necessary for its decompression.
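Both cases can be observed with Python's `gzip` module. The sketch assumes a generated HTML fragment as the text file and random bytes as a stand-in for JPEG, PNG or PDF content, which is already compressed; the exact sizes will vary.

```python
import gzip
import os

# Repetitive text, typical of HTML markup
html = "".join(f"<li class='product'>Item {i}</li>\n" for i in range(500)).encode()

# Stand-in for already-compressed data (assumption: close to random)
jpeg_like = os.urandom(20_000)

for name, data in (("html", html), ("jpeg-like", jpeg_like)):
    packed = gzip.compress(data)
    print(f"{name}: {len(data)} -> {len(packed)} bytes")
```

The text shrinks dramatically, while the random data comes out a few bytes larger than it went in, because gzip can only add its header, trailer and block framing.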

The size of the file must also be taken into account. It is not worth compressing a file smaller than 1 KiB: it will most likely fit into a single packet when sent over the network, so it takes the same time to transmit whether it is compressed or not, and compressing it only adds CPU cost on the client and on the server. It is not easy to establish the minimum file size for which compression pays off, since it depends on multiple factors of the networks the data crosses to reach its destination, but in general it is usually worse for performance to compress files smaller than 1 or 2 KiB.
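The rules above (compress text formats, skip already-compressed ones, skip tiny files) can be summarised as a small decision function. This Python sketch is hypothetical: `should_compress`, the list of MIME types and the 1 KiB threshold are assumptions for illustration, not any server's built-in configuration.

```python
# MIME types for HTML, CSS, JavaScript, XML and JSON (assumed list, not exhaustive)
COMPRESSIBLE_TYPES = {
    "text/html", "text/css", "text/xml",
    "application/javascript", "application/x-javascript",
    "application/json", "application/xml",
}
MIN_SIZE = 1024  # assumed threshold: 1 KiB


def should_compress(content_type: str, size_bytes: int) -> bool:
    """Compress text-like responses that are at least MIN_SIZE bytes long."""
    mime = content_type.split(";")[0].strip().lower()  # drop "; charset=..." parameters
    return mime in COMPRESSIBLE_TYPES and size_bytes >= MIN_SIZE
```

Deciding by MIME type rather than by file extension means the same rule also covers dynamically generated responses.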

Some servers can be configured to keep a cache of the compressed files, so that they do not have to be compressed again on every request. When the corresponding uncompressed file changes, the compressed copy is updated.
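A minimal Python sketch of such a cache, assuming the file's modification time is enough to detect changes. `GzipFileCache` is a hypothetical class, not the mechanism of any particular server: it recompresses a file only when its mtime differs from the one stored with the cached copy.

```python
import gzip
import os


class GzipFileCache:
    """In-memory cache of gzipped static files, refreshed when the source changes."""

    def __init__(self, level: int = 6):
        self.level = level
        self._entries = {}      # path -> (mtime_ns of source, gzipped bytes)
        self.compressions = 0   # how many times gzip actually ran

    def get(self, path: str) -> bytes:
        mtime = os.stat(path).st_mtime_ns
        entry = self._entries.get(path)
        if entry is not None and entry[0] == mtime:
            return entry[1]  # cached copy is still fresh
        # Missing or stale: recompress and store it together with the new mtime
        with open(path, "rb") as f:
            data = gzip.compress(f.read(), compresslevel=self.level)
        self.compressions += 1
        self._entries[path] = (mtime, data)
        return data
```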

Another interesting option is adjusting the compression level: by increasing the CPU cost of compressing the files we can make them smaller, or, on the contrary, decrease the CPU cost at the price of larger files. The right setting will depend on the CPU usage of the server in each case.
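The trade-off can be measured with Python's `gzip.compress`, whose `compresslevel` argument runs from 1 (fastest) to 9 (smallest). The generated page is an arbitrary assumption, and the timings depend entirely on the machine, so treat them as relative.

```python
import gzip
import time

# Hypothetical dynamically generated listing page
page = "".join(
    f"<li class='item'>Product {i}: price {i * 37 % 997} EUR</li>\n" for i in range(5000)
).encode()

for level in (1, 5, 9):
    start = time.perf_counter()
    packed = gzip.compress(page, compresslevel=level)
    elapsed_ms = (time.perf_counter() - start) * 1000
    print(f"level {level}: {len(packed)} bytes in {elapsed_ms:.2f} ms")
```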

How is it configured?

Let’s take a look at the most important parameters of the most common Web servers:

Apache 2.x

In Apache 2.x we must activate the mod_deflate module (although it is called deflate it uses Gzip) and set the configuration as follows:

| Parameter | Action |
| --- | --- |
| SetOutputFilter DEFLATE | Enable compression |
| AddOutputFilterByType DEFLATE text/html text/css application/x-javascript | Set MIME types of files to be compressed |
| DeflateCompressionLevel 5 | Set compression level (between 1 and 9) |

Setting the files to be compressed with the MIME type will always be better than specifying the file extension, as it will apply compression even if the file is dynamically generated.

Apache 1.3

In previous versions of Apache, from 1.3 backwards, we must activate the mod_gzip module and set the configuration as follows in the httpd.conf file (if it is at server level) or in the .htaccess (if it is at directory level):

| Parameter | Action |
| --- | --- |
| mod_gzip_on Yes | Activate the module |
| mod_gzip_item_include file \.js$ | Enable compression for files with .js extension |
| mod_gzip_item_include mime ^text/html$ | Enable compression for files with MIME HTML type |
| mod_gzip_minimum_file_size | Set the minimum size a file must have to be compressed |
| mod_gzip_can_negotiate Yes | Allow pre-compressed (.gz) versions of static files to be served |
| mod_gzip_update_static Yes | Update pre-compressed .gz files automatically instead of manually |

PHP

From PHP you can also enable compression with the following option in the php.ini file:

zlib.output_compression = On

If we have compression already enabled with an Apache module (which is preferable), we should not do this because the configuration will conflict.

Internet Information Services 7

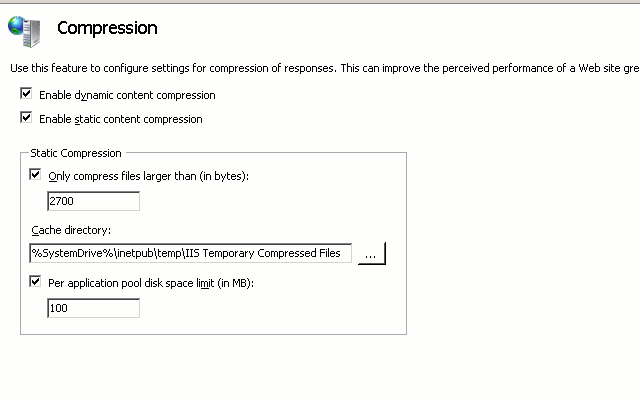

In IIS7 we have the following configuration screen that appears when clicking on compression within the general options:

Then inside the Web.Config file we must set the following values:

```xml
<system.webServer>
  <httpCompression directory="%SystemDrive%\inetpub\temp\IIS Temporary Compressed Files">
    <scheme name="gzip" dll="%Windir%\system32\inetsrv\gzip.dll" />
    <dynamicTypes>
      <add mimeType="text/*" enabled="true" />
      <add mimeType="message/*" enabled="true" />
      <add mimeType="application/javascript" enabled="true" />
      <add mimeType="*/*" enabled="false" />
    </dynamicTypes>
    <staticTypes>
      <add mimeType="text/*" enabled="true" />
      <add mimeType="message/*" enabled="true" />
      <add mimeType="application/javascript" enabled="true" />
      <add mimeType="*/*" enabled="false" />
    </staticTypes>
  </httpCompression>
  <!-- A single urlCompression element; a duplicate would make the configuration invalid -->
  <urlCompression doStaticCompression="true" doDynamicCompression="true" dynamicCompressionBeforeCache="true" />
</system.webServer>
```

The doStaticCompression and doDynamicCompression parameters enable compression of static files and of dynamically generated content, respectively, so both must be set to true to compress both kinds of files.

I hope the correct way to configure gzip compression is now clear. What about your website: does it have gzip compression configured correctly?