Written by Jose Vicente

Google announced yesterday, through its Google Webmaster Central Blog, an update to some of its technical guidelines. These guidelines affect the way Google crawls the content of websites and have an effect on their ranking.

Until now, Google crawled a website much as a text browser (e.g. Lynx) would. It now crawls web pages in a more human-like way, also taking into account the page’s visual design and any functionality implemented in JavaScript. In other words, Google’s robot has gone from simply reading the HTML of the page to rendering that HTML together with its CSS and JavaScript code.

To check whether your website follows Google’s new technical guidelines, you can perform the following checks:

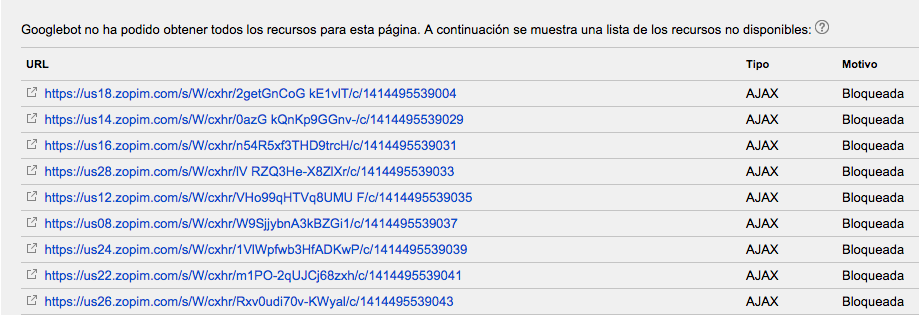

- Review the robots.txt file configuration to ensure that search engines are not blocked from accessing cascading style sheet (.css) or JavaScript (.js) files, as Google now needs these files to render each page correctly.

- Optimize the resources linked from your web pages, such as cascading style sheet (.css) and JavaScript (.js) files. Ensure that they are bundled sensibly (fewer requests to the server means faster downloads); that the code is minified (no blank lines, unnecessary spaces, comments or any other non-essential information); and that Gzip compression is enabled on the server for these file types as well (compressed content reaches users faster).

- Review the download speed data in Google Search Console to ensure that the new Googlebot crawling method does not affect content indexing.

- Check the website’s compatibility with mobile devices, and any render blocking caused by JavaScript, with Google’s PageSpeed Insights tool.
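To get a rough feel for what Gzip compression saves on a text asset, the comparison can be sketched in a few lines of Python. This is only an illustration: the stylesheet content is made up, and `gzip_savings` is a hypothetical helper, not part of any Google tool. Real savings depend on the file and on how the server is configured.

```python
import gzip


def gzip_savings(text):
    """Return (raw_bytes, gzipped_bytes) for a text asset."""
    raw = text.encode("utf-8")
    return len(raw), len(gzip.compress(raw))


# Hypothetical stylesheet content, repeated to mimic the redundancy of a real file.
sample_css = "body { margin: 0; padding: 0; }\n.header { color: #333; }\n" * 50

raw_size, gz_size = gzip_savings(sample_css)
print(f"raw: {raw_size} bytes, gzipped: {gz_size} bytes")
```

Repetitive text such as CSS and JavaScript tends to compress well, which is why enabling compression for these file types is usually worth checking.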

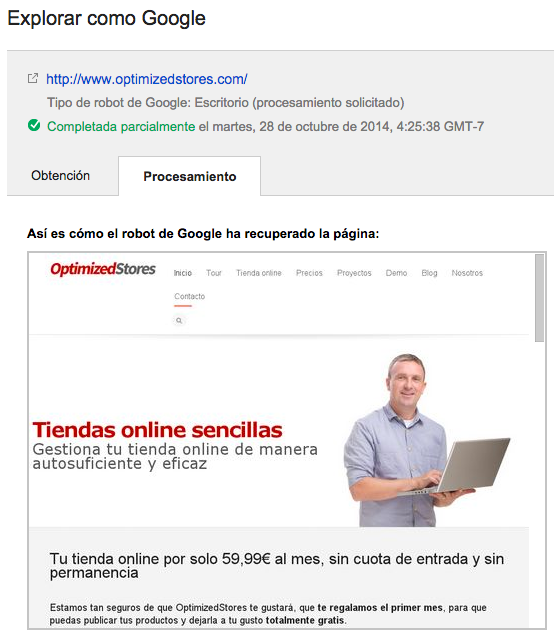

- Check how Google crawls and renders content using the “Fetch as Google” feature (“Fetch and Render” option) in Google Search Console, which has been adapted to test the new technical guidelines.
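Before turning to Google’s own tools, the robots.txt check can also be approximated locally. The sketch below uses Python’s standard `urllib.robotparser` module; the robots.txt rules, the `example.com` URLs and the `blocked_assets` helper are all assumptions made up for illustration, and Python’s parser does not necessarily match Googlebot’s behaviour in every edge case.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that blocks the stylesheet and script directories.
ROBOTS_TXT = """\
User-agent: *
Disallow: /css/
Disallow: /js/
"""


def blocked_assets(robots_txt, asset_urls, user_agent="Googlebot"):
    """Return the asset URLs that the given robots.txt disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in asset_urls if not parser.can_fetch(user_agent, url)]


# Hypothetical assets referenced by a page.
assets = [
    "https://www.example.com/css/main.css",
    "https://www.example.com/js/app.js",
    "https://www.example.com/images/logo.png",
]

blocked = blocked_assets(ROBOTS_TXT, assets)
print(blocked)
```

With these sample rules, the stylesheet and the script are reported as blocked while the image is not. Any .css or .js file that shows up in such a list is a candidate for a robots.txt change.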

Keep in mind that failing to meet any of these guidelines will not in itself lead to a Google penalty.

Keep in mind, too, that we often cannot act on external resources over which we have no control. Even so, any shortcomings against these guidelines should be detected and corrected as far as possible to ensure optimal positioning in Google.