Written by María Navarro

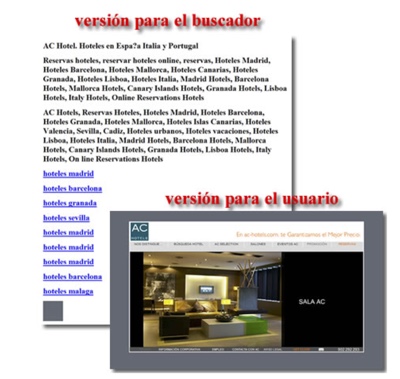

In SEO, cloaking refers to a set of techniques whereby a website’s server or code is configured to present users with content that differs from what it presents to search engine bots. The objective is to rank better for certain keywords.

Cloaking is considered a black-hat technique because it violates Google’s webmaster quality guidelines, which specify that Google’s robot or spider should always access exactly the same content that users see when they visit the website.

Techniques used for cloaking

Some of the techniques traditionally used to disguise content consisted of configuring the server so that, depending on the client requesting the page, it would return either the real content or content created specifically to trick the search engine. This configuration identified requests from Google’s robot, Googlebot, through the User-Agent request header, and used that value to distinguish human visits from search engine visits.
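The user-agent mechanism described above can also be turned around to check a site for this kind of cloaking. The following is a minimal sketch, not a definitive detector: it requests the same URL twice, once announcing a browser and once announcing Googlebot, and compares the two responses. The function names, the shortened user-agent strings and the 0.9 similarity threshold are all assumptions made for illustration.

```python
import difflib
import urllib.request

# Illustrative user-agent strings (assumptions); real clients send longer values.
BROWSER_UA = "Mozilla/5.0 (X11; Linux x86_64) Gecko/20100101 Firefox/120.0"
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def fetch(url, user_agent, timeout=10):
    """Download a page while announcing the given User-Agent header."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


def similarity(body_a, body_b):
    """Return a ratio between 0 and 1; 1.0 means the two bodies are identical."""
    return difflib.SequenceMatcher(None, body_a, body_b).ratio()


def looks_cloaked(url, threshold=0.9, fetcher=fetch):
    """Flag a URL whose browser and Googlebot responses differ substantially.

    The threshold is an arbitrary assumption; `fetcher` can be replaced
    with a stub to try the logic without network access.
    """
    as_browser = fetcher(url, BROWSER_UA)
    as_bot = fetcher(url, GOOGLEBOT_UA)
    return similarity(as_browser, as_bot) < threshold
```

A check like this only covers discrimination by user-agent. It will not catch a server that decides based on the source IP of the request, and pages with legitimately dynamic content (rotating ads, timestamps) may need a lower threshold.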

Other techniques for disguising optimized content included JavaScript redirects, which could display different content in the user’s browser than search engines saw (until recently, search engines did not execute such code); serving different content depending on the source IP of the request; and using a Meta Refresh pointing to a URL different from the original one, with a delay (set in the content attribute) close to 0 seconds.
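Of the techniques just listed, the Meta Refresh is the easiest to spot in the raw HTML. As a rough sketch using only Python's standard library, the parser below collects `<meta http-equiv="refresh">` tags and reports those that redirect to another URL almost immediately. The class and function names and the 1-second cutoff are assumptions chosen for this example.

```python
from html.parser import HTMLParser


class MetaRefreshFinder(HTMLParser):
    """Collect (delay_seconds, target_url) pairs from meta refresh tags."""

    def __init__(self):
        super().__init__()
        self.refreshes = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("http-equiv") or "").lower() != "refresh":
            return
        # The content attribute looks like "0; url=https://example.test/".
        content = attributes.get("content") or ""
        delay_part, _, rest = content.partition(";")
        try:
            delay = float(delay_part.strip())
        except ValueError:
            return  # malformed delay: ignore this tag
        target = None
        rest = rest.strip()
        if rest.lower().startswith("url="):
            target = rest[4:].strip().strip("'\"")
        self.refreshes.append((delay, target))


def suspicious_refreshes(html, max_delay=1.0):
    """Return refreshes that jump to another URL within max_delay seconds."""
    finder = MetaRefreshFinder()
    finder.feed(html)
    return [(delay, target) for delay, target in finder.refreshes
            if target and delay <= max_delay]
```

A refresh without a target URL simply reloads the same page, so it is left out of the result. A near-zero delay with a different target is only a hint worth reviewing by hand, not proof of cloaking.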

Some of these techniques are easy to detect by checking what content we get when we browse the website with JavaScript disabled or without accepting cookies, using browser extensions such as Web Developer.

Cloaking in today’s SEO

Cloaking techniques have now fallen into disuse in SEO, both because search engines can easily detect them and because over-optimized content (content with a high concentration of keywords) is itself increasingly easy to identify and penalize.

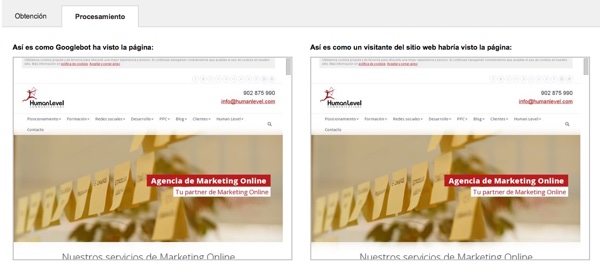

The robots used by search engines are now also capable of executing JavaScript code and of comparing how human users perceive pages with how search engines interpret them. With the Fetch as Google feature (under the Crawl menu) in Google Search Console, we can check whether our server or web application performs some kind of cloaking.

When using dynamic serving as a solution to deliver content optimized for mobile devices, Google indicates that an HTTP header (Vary: User-Agent) should be included to make it explicit that the response varies depending on the browser (user-agent), so that the search engine knows that we are not trying to cloak.
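To make the header concrete, here is a minimal sketch of a WSGI application that serves different HTML to mobile and desktop clients and declares this with `Vary: User-Agent`. The `is_mobile` check and the page bodies are deliberately simplistic assumptions; a real site would use a more robust device detection method.

```python
def is_mobile(user_agent):
    """Very rough mobile check, for illustration only (an assumption)."""
    return "Mobi" in user_agent


def app(environ, start_response):
    """WSGI app that varies its HTML by user-agent and says so explicitly."""
    user_agent = environ.get("HTTP_USER_AGENT", "")
    if is_mobile(user_agent):
        body = b"<html><body>Mobile layout</body></html>"
    else:
        body = b"<html><body>Desktop layout</body></html>"
    headers = [
        ("Content-Type", "text/html; charset=utf-8"),
        ("Content-Length", str(len(body))),
        # Tells crawlers and caches that the response depends on the User-Agent.
        ("Vary", "User-Agent"),
    ]
    start_response("200 OK", headers)
    return [body]
```

To try it locally, the app can be passed to `wsgiref.simple_server.make_server("", 8000, app)` from the standard library and started with `serve_forever()`. The same `Vary` header also keeps intermediate caches from serving the mobile version to desktop visitors, or the reverse.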