Written by Ramón Saquete

If you are a Web developer, after reading the series of articles I am going to develop, you will know how to implement a search engine in a Web, beyond the typical and inefficient search engine that only looks for matches in the database and does not provide results ordered by relevance. If you are an SEO consultant, you will have a better understanding of the inner workings of search engines.

In order to reach as many readers as possible, I will try to express myself in the least technical way possible, however, and since this is a high-level subject, in some cases I will use mathematical formulas. In these cases, those who have not mastered them can jump directly to the explanation. However, those of you who are skilled in this area will have a much deeper understanding of what I am explaining, since a formula can be worth a thousand words.

Let’s start by putting ourselves in the picture. Within Computer Science, the field of Artificial Intelligence is divided into many branches such as: Shape Recognition, Robotics, Computer Vision, Natural Language Processing, etc. Here we are going to focus on one of the applications of Natural Language Processing, the Information Retrieval Systems, which from now on I will call by its acronym IRS. This, as well as other applications of this branch, such as Machine Translation, Response Search Systems, Dialogue Systems or Information Extraction Systems, represent for the researchers who develop them, areas in which it is necessary to specialize, since they, in turn, are divided into many quite complex sub-problems. On the other hand, there is the area called Web Intelligence, which consists of applying Artificial Intelligence techniques to a Web.

What is an IRS?

IRS are tools that, based on a query, are able to choose from a huge collection of documents, the ones that respond to that query and sort them by relevance.

If you look for scientific articles about Information Retrieval you will find much more information than what I am going to give here, but of course, usually with less didactic explanations. Google has several articles on IR, from its researchers, published in:

https://research.google.com/pubs/InformationRetrievalandtheWeb.html

To be able to perform the colossal feat I have described, over millions of documents and in a few seconds, simplifying a lot and without taking into account many technical aspects, the following tasks are carried out:

- Indexing:

- The documents are first pre-processed to analyze them and apply transformations that later improve the search.

- The weights of the terms extracted in the previous step are then calculated and efficiently stored in an index.

- Treatment of the consultation:

- The query is analyzed to represent it internally in a way that allows it to be compared with the documents.

- Comparison between consultation and document collection

- A value is obtained that indicates the relevance of each document for the query and that will allow them to be sorted.

In this article I will explain only the Indexing phase and in the following article the other two phases.

Indexing

Document pre-processing

As I have already mentioned, the document must first be analyzed. To perform this task, the HTML tags are removed, extracting their meaning first, in order to know the importance that these tags contribute to the words they contain.

In the process, the number of occurrences of each word is counted, since, based on this value, the importance of the word in the document will be calculated, since if it appears more times, it is more likely that the document is more relevant for that word.

At the same time, the position of the word in the document is usually calculated, since if the words we are looking for are closer together in the document, it is more likely that it is a relevant document for the query. So having the position stored, we can calculate the distance between those query words in the document, to take it into account.

Some IRS instead of indexing words index their root, in order to consider derivations of the same word (e.g. verb tenses) as repetitions of the same term. The calculation of the root is usually done by some heuristic algorithm that tries to guess the correct root. The most widely used in English is Martin Porter’s Stemmer, which has its Spanish adaptation in the Snowball software.

The strategy of indexing the root of words has the problem that synonymous words are considered distinct words, so other IRSs group the frequency of occurrence of words by meaning. To do this, it is necessary to solve one of the most difficult and studied problems in natural language processing, the WSD (Word Sense Disambiguation), which tries to disambiguate the meaning of words by their context.

Another strategy is to index sets of two or more words instead of single words, to much better refine the user’s search intent. As this requires a much larger index size, only the most frequent word sets are taken into account in these cases.

What does Google do? No one knows, you may use all of the above strategies or you may use none of them and use n-grams instead. What we can tell from the search results is that it takes into account synonyms and the closeness of the query keywords, functionalities that can be achieved in many different ways.

There is also one thing that, looking at the results, we can know that Google does not do, which is to eliminate stop words. Stop words are words that have a very high frequency of occurrence in documents, such as articles and prepositions. Since these are words that do not provide relevant information about the document and significantly increase the size of the index, they are usually discarded in all IRSs. Google does not remove them because they can sometimes provide information to the query, for example “country” and “the country” are searches with different intent.

Calculation of the weight of words or terms

In IRS, the vector space model is normally used to represent documents and queries, because it is the one that obtains the best results. This means that we will represent documents and user queries numerically in an n-dimensional vector space, with as many dimensions as there are words. If for the moment you don’t understand the previous sentence, don’t worry, in the next post I promise to explain it in more detail. What matters now is that we are going to calculate the weight or importance that each word has when returning results sorted by relevance. Gerard Salton’s formula, called TFIDF (Term Frequency – Inverse Document Frequency), is normally used for this task. This formula is calculated by multiplying the frequency of the term in the document by the inverse frequency of the term in the document collection, which I will explain below.

It is logical to think that if a word appears more times in a document, it will make that document more relevant in a query containing that word, so the first element of the formula is the frequency of the term in the document, which we can use as we obtained it in the previous step or normalize it by dividing by the frequency of the term that appeared most often in the document. In this way the term frequencies of all documents will always be between 0 and 1. This will prevent long documents from taking precedence over short documents, although in practice long documents will always have slightly more weight.

Formula:

In the denominator we have the maximum of all the frequencies of the w terms belonging to document d.

For anyone interested in digging deeper, this is not one of the best ways to normalize for fairer results, there are much better non-linear normalizations, such as Amit Singhal’s pivoted normalization.

If the word appears in many documents in the collection, that word will be less determinant to return relevant results and if it appears in few documents, it will make those documents more relevant. This is the idea of the inverse frequency of the word in the document collection and is calculated by dividing the number of documents in the collection by the number of documents containing the term. A logarithm is applied to the result to make the high values not grow too much and thus normalize them a little.

Formula:

Finally, we can calculate the TFIDF which will finally give us the weight of the word. This is the importance of the term in deciding the search results, which is given by the importance of the word t, in document d, by the importance of the word in the collection D:

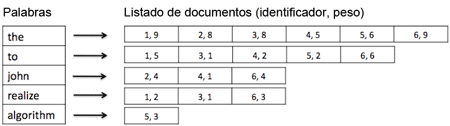

Now we have all the necessary information to elaborate the inverse index, which will be composed of a list of words and, for each one, we will have a list of identifiers of the documents where they appear together with their weights (TFIDF).

In this way, when a word arrives, we will be able to quickly know which documents it is in and what weight the word has in them, so that we can elaborate a formula to order the documents by relevance. This formula and several other things, I will finish explaining them next month in my next post, I hope you do not fail to read it and comment on it.